The GeForce RTX 4090 is the latest and most powerful graphics card from Nvidia, released in October 2022. It is built on the new Ada Lovelace architecture, which improves the performance, efficiency, and AI capabilities of the card. It also has 24 GB of GDDR6X memory, a boost clock of 2.52 GHz, and a TDP of 450 W.

The GeForce RTX 4090 is designed for enthusiasts who want the best possible performance for 4K gaming with ray tracing, VR, and content creation. It delivers high frame rates at ultra settings in demanding games and applications. However, it also comes with a steep price tag of $1,599 and requires a powerful PC to support it.

The GeForce RTX 4090 has many advantages over other graphics cards, such as:

- It is the fastest GPU on the market, beating all other competitors by a large margin in both gaming and content creation.

- It is the best GPU for ray tracing, offering stunning lighting and reflections in games that support this feature. It is also much faster than other GPUs at ray tracing, thanks to its improved RT cores.

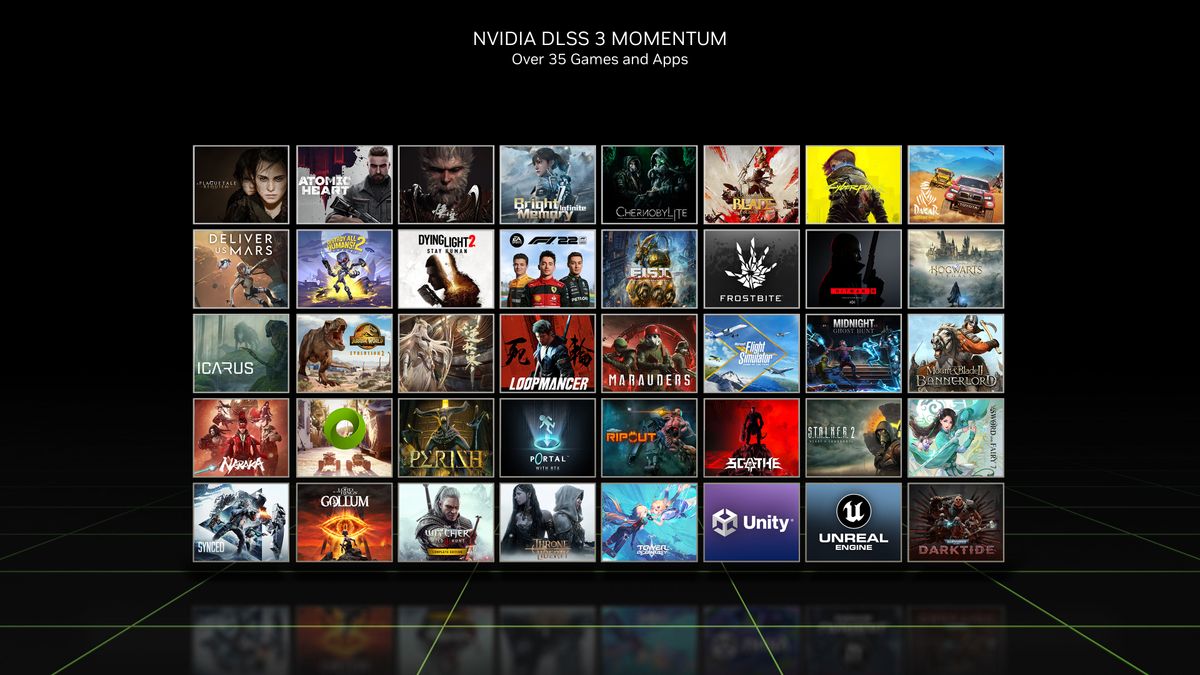

- It supports DLSS 3, the newest version of Nvidia’s AI-powered upscaling technology, which improves both the image quality and the performance of games. DLSS 3 adds Frame Generation on top of the upscaling found in previous versions, and it also beats AMD’s FSR 2.0, which is a similar technology.

- It has a large memory capacity of 24 GB of GDDR6X, which allows it to handle high-resolution textures, complex scenes, and large datasets without any issues.

- It is built on the new Ada Lovelace architecture, which improves the efficiency and AI capabilities of the GPU. Ada also introduces new ray tracing features, such as Shader Execution Reordering (SER), Opacity Micro-Maps (OMM), and Displaced Micro-Meshes (DMM).

GeForce RTX 4090 shortcomings:

The GeForce RTX 4090 also has some disadvantages:

- It is very expensive, costing $1,599 for the Founders Edition and even more for the custom models from other manufacturers.

- It is very power-hungry, with a 450 W TDP that calls for a high-quality PSU. It also generates a lot of heat and noise, so it needs a good cooling system.

- It is very hard to find, due to high demand and low supply. The GPU market is still affected by the global chip shortage, scalpers, miners, and gamers who want to get their hands on this card.

- It is overkill for most gamers, especially those who play at lower resolutions or don’t care about ray tracing or DLSS. The RTX 4090 offers more performance than most games or applications can use or need.

In the realm of cutting-edge graphics technology, the Nvidia GeForce RTX 4090 stands in a class of its own. This graphics card redefines the boundaries of visual quality and lifts the gaming and creative experience to new heights. Here, we delve into the details of the Nvidia GeForce RTX 4090, covering its features, its performance, and the advantage it offers enthusiasts and professionals alike.

The Apex of Innovation: NVIDIA GeForce RTX 4090’s Features

Ray-Tracing Redefined

The Nvidia GeForce RTX 4090 introduces an era of unparalleled realism with its groundbreaking ray-tracing capabilities. Rendered scenes attain a level of lifelike authenticity that was once deemed unattainable. The dedicated ray-tracing cores work harmoniously to simulate how light interacts with objects, resulting in breathtaking visuals that blur the line between virtual and reality.

Gigantic VRAM Capacity

Equipped with a colossal VRAM capacity, the Nvidia GeForce RTX 4090 ensures seamless handling of even the most demanding tasks. With 24 GB of GDDR6X memory, creators and gamers alike can indulge in expansive worlds, intricate designs, and complex simulations without compromise.

AI-Powered Tensor Cores

Harnessing the prowess of AI-driven technology, the Tensor Cores within the Nvidia GeForce RTX 4090 deliver an astonishing leap in performance. Tasks that once required extensive processing time are now expedited, thanks to real-time AI processing. From content creation to real-time in-game optimization, the Tensor Cores elevate efficiency to unprecedented levels.

Unbridled Performance: Unleashing the Full Potential

Gaming Domination

Embrace unrivaled gaming supremacy with the Nvidia GeForce RTX 4090. Boasting a boost clock of 2,520 MHz and 16,384 CUDA cores, this graphics card keeps latency low and immerses gamers in a world of rapid-fire responsiveness. As a result, frame rates soar and graphics remain impeccable.

Creative Brilliance

For content creators, the Nvidia GeForce RTX 4090 opens doors to limitless possibilities. The immense VRAM capacity, coupled with the AI-powered Tensor Cores, enables smooth 8K video editing, intricate 3D rendering, and complex simulations. Designers and creators can bring their visions to life with unparalleled accuracy, as each detail is intricately preserved.


The Verdict: Embrace the Future of Graphics

| Graphics Card | RTX 4090 | RTX 3090 Ti | RTX 3090 | RTX 3080 Ti | RX 6950 XT | Arc A770 16GB |
|---|---|---|---|---|---|---|
| Architecture | AD102 | GA102 | GA102 | GA102 | Navi 21 | ACM-G10 |
| Process Technology | TSMC 4N | Samsung 8N | Samsung 8N | Samsung 8N | TSMC N7 | TSMC N6 |
| Transistors (Billion) | 76.3 | 28.3 | 28.3 | 28.3 | 26.8 | 21.7 |
| Die size (mm^2) | 608.4 | 628.4 | 628.4 | 628.4 | 519 | 406 |
| SMs / CUs / Xe-Cores | 128 | 84 | 82 | 80 | 80 | 32 |
| GPU Shaders | 16384 | 10752 | 10496 | 10240 | 5120 | 4096 |
| Tensor Cores | 512 | 336 | 328 | 320 | N/A | 512 |
| Ray Tracing “Cores” | 128 | 84 | 82 | 80 | 80 | 32 |
| Boost Clock (MHz) | 2520 | 1860 | 1695 | 1665 | 2310 | 2100 |
| VRAM Speed (Gbps) | 21 | 21 | 19.5 | 19 | 18 | 17.5 |
| VRAM (GB) | 24 | 24 | 24 | 12 | 16 | 16 |
| VRAM Bus Width (bits) | 384 | 384 | 384 | 384 | 256 | 256 |
| L2 / Infinity Cache (MB) | 72 | 6 | 6 | 6 | 128 | 16 |
| ROPs | 176 | 112 | 112 | 112 | 128 | 128 |
| TMUs | 512 | 336 | 328 | 320 | 320 | 256 |
| TFLOPS FP32 | 82.6 | 40 | 35.6 | 34.1 | 23.7 | 17.2 |
| TFLOPS FP16 (FP8/INT8) | 661 (1321) | 160 (320) | 142 (285) | 136 (273) | 47.4 | 138 (275) |
| Bandwidth (GBps) | 1008 | 1008 | 936 | 912 | 576 | 560 |
| TDP (watts) | 450 | 450 | 350 | 350 | 335 | 225 |
| Launch Date | Oct 2022 | Mar 2022 | Sep 2020 | Jun 2021 | May 2022 | Oct 2022 |
| Launch Price | $1,599 | $1,999 | $1,499 | $1,199 | $1,099 | $349 |
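Two rows of the table, FP32 TFLOPS and memory bandwidth, can be derived from other rows. The Python sketch below shows the usual arithmetic, assuming each shader performs one fused multiply-add (two floating-point operations) per clock at the listed boost clock. The function names `fp32_tflops` and `bandwidth_gbps` are ours, chosen for illustration.

```python
# Values copied from the table: shader count, boost clock (MHz),
# VRAM speed (Gbps per pin), and memory bus width (bits).
CARDS = {
    "RTX 4090":      (16384, 2520, 21.0, 384),
    "RTX 3090 Ti":   (10752, 1860, 21.0, 384),
    "RTX 3090":      (10496, 1695, 19.5, 384),
    "RTX 3080 Ti":   (10240, 1665, 19.0, 384),
    "RX 6950 XT":    (5120, 2310, 18.0, 256),
    "Arc A770 16GB": (4096, 2100, 17.5, 256),
}


def fp32_tflops(shaders, boost_mhz):
    # Assumption: one fused multiply-add (2 FLOPs) per shader per clock.
    return shaders * 2 * boost_mhz * 1e6 / 1e12


def bandwidth_gbps(speed_gbps, bus_bits):
    # Per-pin data rate times bus width, converted from bits to bytes.
    return speed_gbps * bus_bits / 8


for name, (shaders, boost, speed, bus) in CARDS.items():
    tflops = fp32_tflops(shaders, boost)
    bandwidth = bandwidth_gbps(speed, bus)
    print(f"{name:14s} {tflops:5.1f} TFLOPS  {bandwidth:6.0f} GB/s")
```

These are theoretical peaks at the rated boost clock. Real clocks vary with load and cooling, which is one reason benchmark results do not scale directly with the TFLOPS figures.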

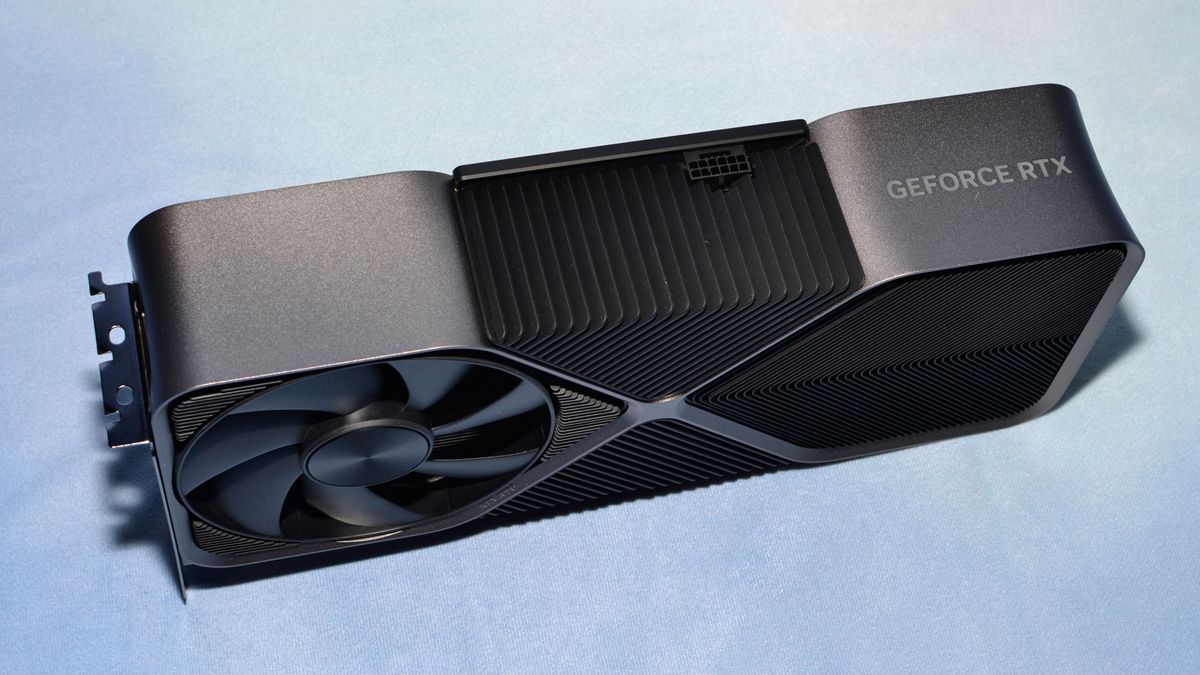

Nvidia’s GeForce RTX 4090 Founders Edition looks much like its predecessor, the RTX 3090 Founders Edition, but there are several subtle changes. To begin, the new card is slightly thicker and a bit shorter: it measures 304x137x61mm, compared with 313x138x57mm for the 3090. Weight is essentially unchanged at 2186g, versus 2189g for the previous card.

Aesthetically, the RTX 4090 has a slightly concave shape to its outer frame. The difference is visible in the accompanying images, where we place the 3090 and 4090 side by side. The changes are functional rather than purely cosmetic.

Nvidia sticks with two fans: one pulls air through the heatsink at the back of the card, while the other pushes air through the fins and out the exhaust port around the video connectors. The new fans are 115mm in diameter, up from 110mm on the previous generation, and have a slightly smaller central hub (38mm versus 42mm). Nvidia claims the fans provide 20% more airflow, presumably at the same speed.

Power delivery has also changed over the past two years. Nvidia introduced a 12-pin power connector with the RTX 30-series. That connector is compatible with the new PCIe 5.0 12VHPWR 16-pin connector and lacks only the four additional sense pins. On the 4090, the 16-pin connector sits parallel to the PCB, whereas the 3090’s 12-pin connector was perpendicular to it.

Finally, where the RTX 3090 shipped with a dual 8-pin-to-12-pin adapter, the RTX 4090 comes with a quad 8-pin-to-16-pin adapter. The same adapter appears to be standard across the RTX 4090 models from Nvidia’s partners.

In terms of things that definitely haven’t changed, the RTX 4090 still comes with three DisplayPort 1.4a outputs and a single HDMI 2.1 output. We still haven’t seen any DisplayPort 2.0 monitors, which might be part of the reason for not upgrading the outputs, but it’s still a bit odd as the DP 2.0 standard has been finalized since 2019.

Similarly, the PCIe x16 slot sticks with the PCIe 4.0 standard rather than upgrading to PCIe 5.0. That’s probably not a big deal, especially since multi-GPU support in games has all but vanished. We’d argue that the frame generator and DLSS 3 also make multi-GPU basically unnecessary. To that end, Nvidia has also removed NVLink support from the AD102 GPU and the RTX 40-series cards. Yes, we can effectively declare that SLI is now dead, or at the very least it’s lying dormant in a coma.

Test Setup for GeForce RTX 4090

Our GPU test PC and gaming suite were overhauled in early 2022, and we are sticking with the same hardware for now. AMD’s Ryzen 9 7950X might be slightly faster, but we already use XMP for a modest performance boost, and we are not overly concerned about a few percent difference in frame rates. That said, if you plan to buy an RTX 4090, you will want at least a Core i9-12900K like ours, or alternatively the upcoming Core i9-13900K or the 7950X mentioned above.

Our CPU sits in an MSI Pro Z690-A DDR4 WiFi motherboard with DDR4-3600 memory, a choice made for practicality rather than maximum performance. The Crucial P5 Plus 2TB SSD sounds spacious, but with modern games growing ever larger it is starting to feel merely sufficient. We have moved to Windows 11 and are running the latest 22H2 release (with VBS and HVCI disabled) to get the most out of Alder Lake. The rest of the hardware is listed in the accompanying boxout.

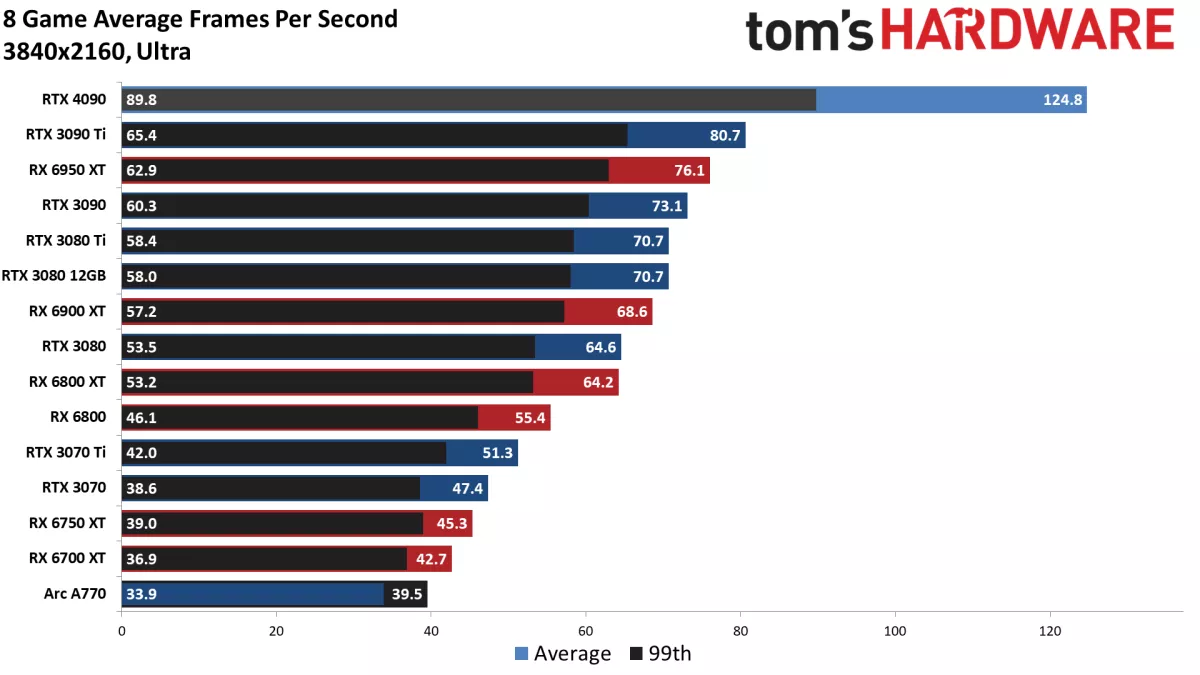

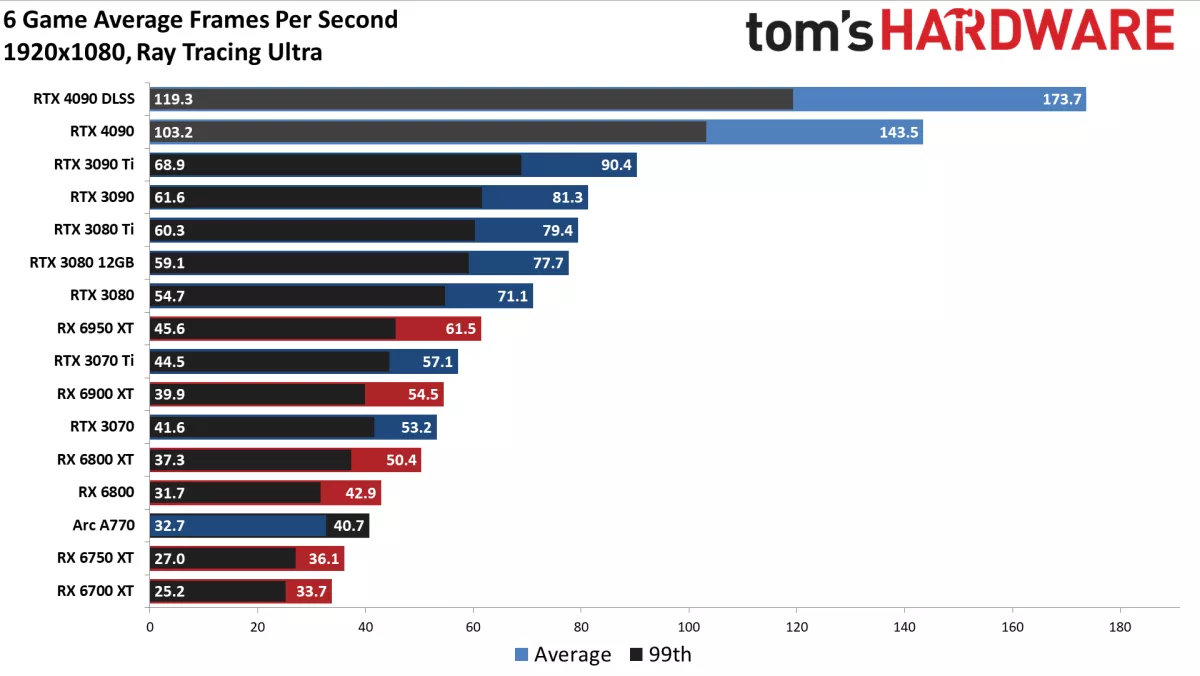

Our gaming tests consist of a “standard” suite of eight games tested without ray tracing (even where the game supports it) and a separate “ray tracing” suite of six games that all use multiple RT effects. We tested the RTX 4090 at all of our usual settings, though our focus is squarely on 4K performance. 1440p is the step-down option, for those weighing one of the upcoming 1440p 360 Hz displays against a 4K 144 Hz panel.

We also ran the tests at 1080p, but the results matter little unless you play games with ray tracing. Many games were already largely CPU-bound at 1080p with the RTX 3090 Ti and RX 6950 XT. The RTX 4090 shifts the balance so far that the overall gap between 1080p ultra and 1440p ultra is barely more than 5%. We also tested the 4090 with DLSS 2 Quality mode in the ten games that support it.

Beyond gaming, we run a set of professional and content creation benchmarks that make use of the GPU: SPECviewperf 2020 v3, Blender 3.30, OTOY OctaneBenchmark, and V-Ray Benchmark.

The Ada Lovelace architecture and RTX 40-series GPUs also bring a number of new features, so we looked at DLSS 3 in preview builds of several games supplied by Nvidia. At present the RTX 4090 is the only card that supports DLSS 3, which rules out head-to-head comparisons with other GPUs. We also measured performance and latency on several top-end graphics cards using the preview build of Cyberpunk 2077. Only Nvidia GPUs can be used for this, because Reflex support is required for Nvidia’s FrameView utility to capture latency data.

GeForce RTX 4090: Gaming Performance

Let’s start with the most important test: 4K with settings at maximum. If you game on a 1080p monitor, even one with a very high refresh rate, the RTX 4090 is more than you need. That is less true in games that use many ray tracing effects, which we will get to shortly.

Take a moment to look through all nine charts, not just the overall average. If you look only at the average, though, you will see that the RTX 4090 delivers a 55% performance increase over its predecessor, the RTX 3090 Ti, which launched only about six months earlier. Anyone who recently bought a 3090 Ti may feel some regret. The $500 saving aside, if you are shopping for a GPU that costs $1,000 or more, we think this level of performance justifies the price.

Compared with other GPUs, the 4090 is 71% faster than the previous generation’s RTX 3090 Founders Edition and 77% faster than the RTX 3080 Ti. Against AMD’s top card, the RX 6950 XT, it is 64% faster in our standard gaming suite, which does not exercise many of the Ada Lovelace architecture’s additional features. That is a large gap, and it may put Nvidia’s raw performance beyond the reach of AMD’s upcoming RDNA 3 GPUs. The next month or so will show where AMD stands, but for now Nvidia has set a high bar for its competition.
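To put those percentage leads on a common scale, it can help to flip them around and express each card as a fraction of the RTX 4090’s average 4K performance. The short Python sketch below does only that arithmetic; the dictionary and function names are illustrative, and the inputs are the average leads quoted above.

```python
# Average 4K leads of the RTX 4090 quoted in the text (percent faster).
LEAD_PCT = {
    "RTX 3090 Ti": 55,
    "RTX 3090": 71,
    "RTX 3080 Ti": 77,
    "RX 6950 XT": 64,
}


def relative_to_4090(lead_pct):
    # "X% faster" means the 4090 scores (1 + X/100) times the other card,
    # so the other card delivers the reciprocal of that ratio.
    return 1 / (1 + lead_pct / 100)


for card, lead in LEAD_PCT.items():
    print(f"{card:12s} {relative_to_4090(lead):.0%} of RTX 4090")
```

Note that a 55% lead for the 4090 does not mean the 3090 Ti is 55% slower: by this arithmetic it delivers roughly 65% of the 4090’s frame rate, or about 35% less.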

Note that the 55% average includes games that remain CPU-bottlenecked, such as Flight Simulator. In those games, the RTX 4090’s performance barely drops going from 1080p ultra to 1440p ultra to 4K ultra. That is part of the appeal of Nvidia’s DLSS 3 Frame Generation technology, which we will cover shortly.

Across the eight individual games, the RTX 4090 beats the 3090 Ti by anywhere from 11% (Flight Simulator) to 112% (Total War: Warhammer 3). Those are the extremes; the other six games fall in a narrower range, from 46% (Far Cry 6, Red Dead Redemption 2) to 70% (Forza Horizon 5).

It is also worth looking at what DLSS 2 Quality mode does in the four games that support it. Flight Simulator performance drops by 4%, which again points to CPU limits. Horizon Zero Dawn gains a modest 10%, Watch Dogs Legion gains 13%, and Red Dead Redemption 2 gains 14%. By comparison, the RTX 3090 Ti gained up to 35% with DLSS 2 Quality mode, which further highlights how much CPU limits matter even at 4K.

Still, conventional rasterization is not where this card shines brightest. It clearly beats its predecessors in such games, but its real strength shows in games with heavy ray tracing.

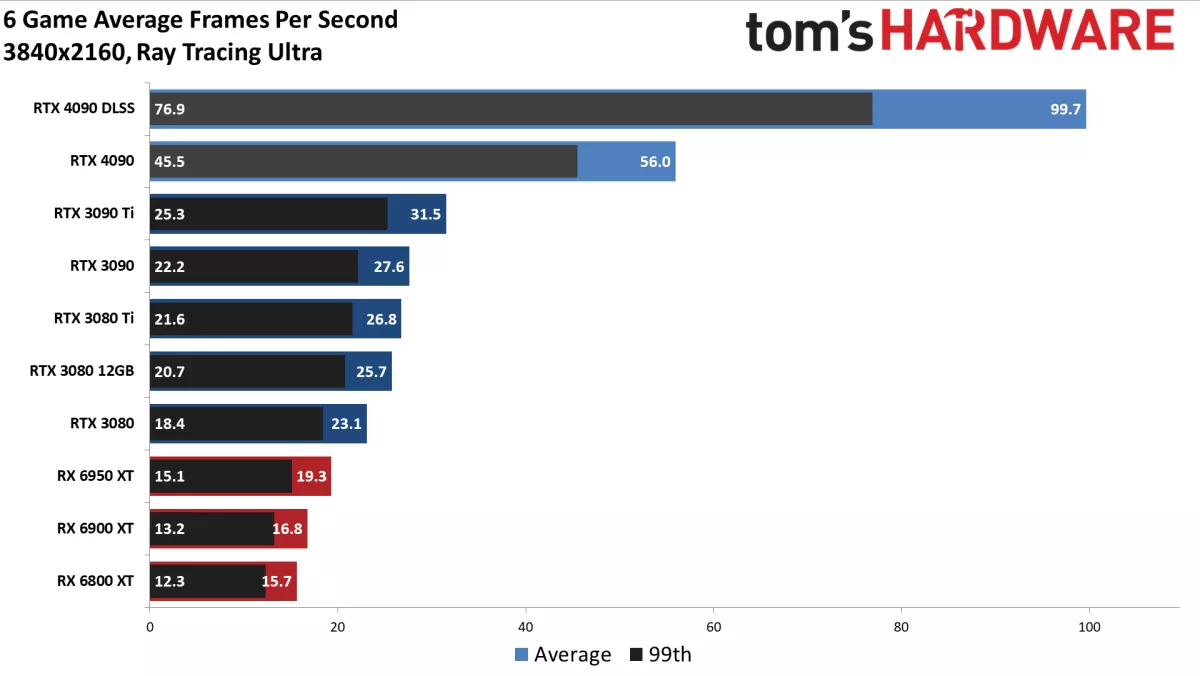

If the standard gaming results left you unimpressed, the ray tracing results should get your attention. Here the RTX 4090 is 78% faster than the previous generation’s RTX 3090 Ti, and that is in existing games. Expect more demanding ray tracing games to arrive, though getting good performance from them will likely require an RTX 40-series card with DLSS 3.

Compared with other GPUs, the 4090 leads AMD’s top RX 6950 XT by 190%, which is nearly three times the performance. It is also faster than both the RTX 3090 and the 3080 Ti, beating the latter by a little more than 2x. Enabling DLSS Quality mode upscaling, which every RTX card supports, boosts the 4090’s performance by a further 78%. As a result, the RTX 4090 running DLSS in Quality mode delivers roughly five times the performance of the 6950 XT in demanding DXR games.
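The “fivefold” figure follows from multiplying the two gains rather than adding them. A minimal Python check, using only the percentages quoted above (the helper name `pct_to_ratio` is ours):

```python
def pct_to_ratio(pct_faster):
    # Convert "X% faster" into a performance multiplier.
    return 1 + pct_faster / 100


lead_over_6950xt = pct_to_ratio(190)  # native 4K DXR lead: 2.9x
dlss_quality_gain = pct_to_ratio(78)  # DLSS Quality uplift on the 4090: 1.78x

# Speedups compound multiplicatively.
combined = lead_over_6950xt * dlss_quality_gain
print(f"RTX 4090 with DLSS Quality vs RX 6950 XT native: {combined:.2f}x")
```

The comparison pits an upscaled result against a native one, so it describes achievable frame rates rather than like-for-like rendering work.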

The individual game charts show a range of results as usual, though a much narrower one than in our rasterization benchmarks. That is because most ray tracing games push the GPU to its limits, especially at 4K. Across the six games, the 4090’s lead ranged from 67% in Control Ultimate Edition, the oldest game in our DXR suite, to 106% in Fortnite with all RT effects at maximum.

Even without DLSS, the RTX 4090 comes close to delivering native 4K ultra ray tracing at 60 fps or more. Performance is comfortably playable, staying above 40 fps in every game. DLSS 2 Quality upscaling lifts the average to around 100 fps and puts every DXR test well above 60 fps. We will look at what DLSS 3 can add using preview builds of upcoming games.

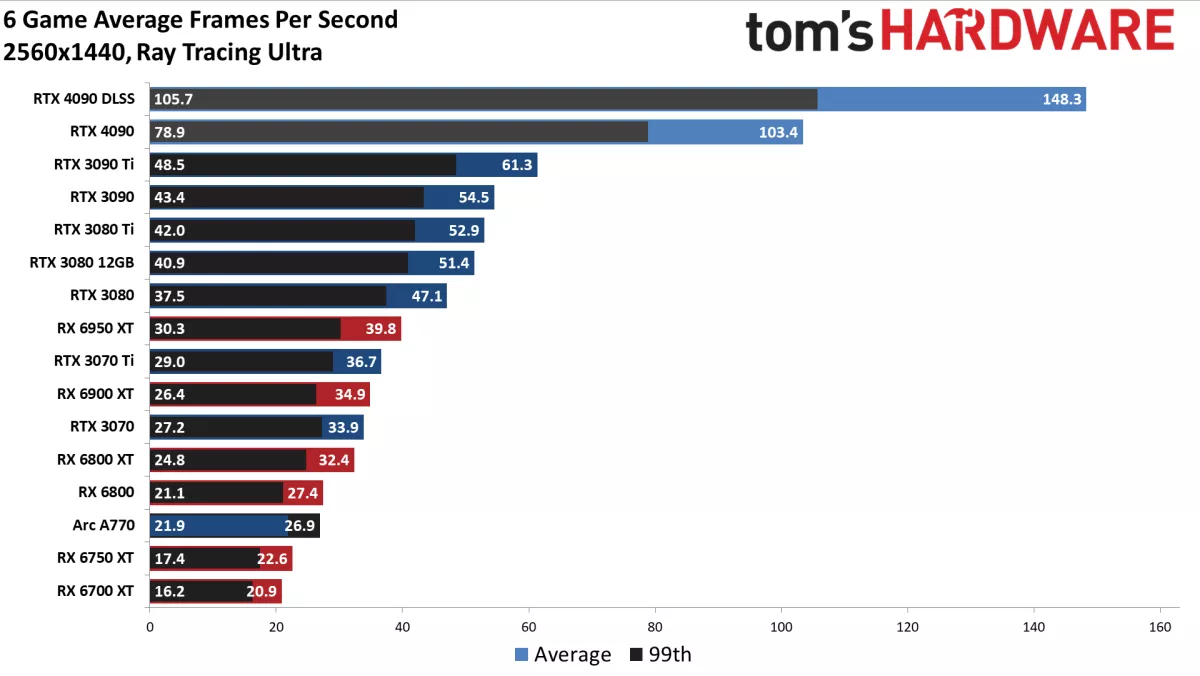

With the RTX 4090, 1440p ultra now behaves the way 1080p ultra used to. In other words, CPU and other system bottlenecks now play a larger role even at 1440p. The 4090 still leads the 3090 Ti by 28% and the RX 6950 XT by 25% on average, but it is clearly being held back. The DLSS results confirm this: at best, performance matches native, and at worst, frame rates drop by 5%.

Looking at the individual game charts, Flight Simulator stands out: the 4090 trails the 3090 Ti by 10%. Normally, CPU bottlenecks affect all GPUs from the same manufacturer equally. Since Ada is a new architecture, however, the drivers probably need some tuning. In this case, the Ada code path does not appear to be as well optimized as the mature Ampere path.

Flight Simulator is the only game affected this way. Even so, Far Cry 6 and Horizon Zero Dawn now show only single-digit percentage leads, while Watch Dogs Legion shows an average improvement of 23%.

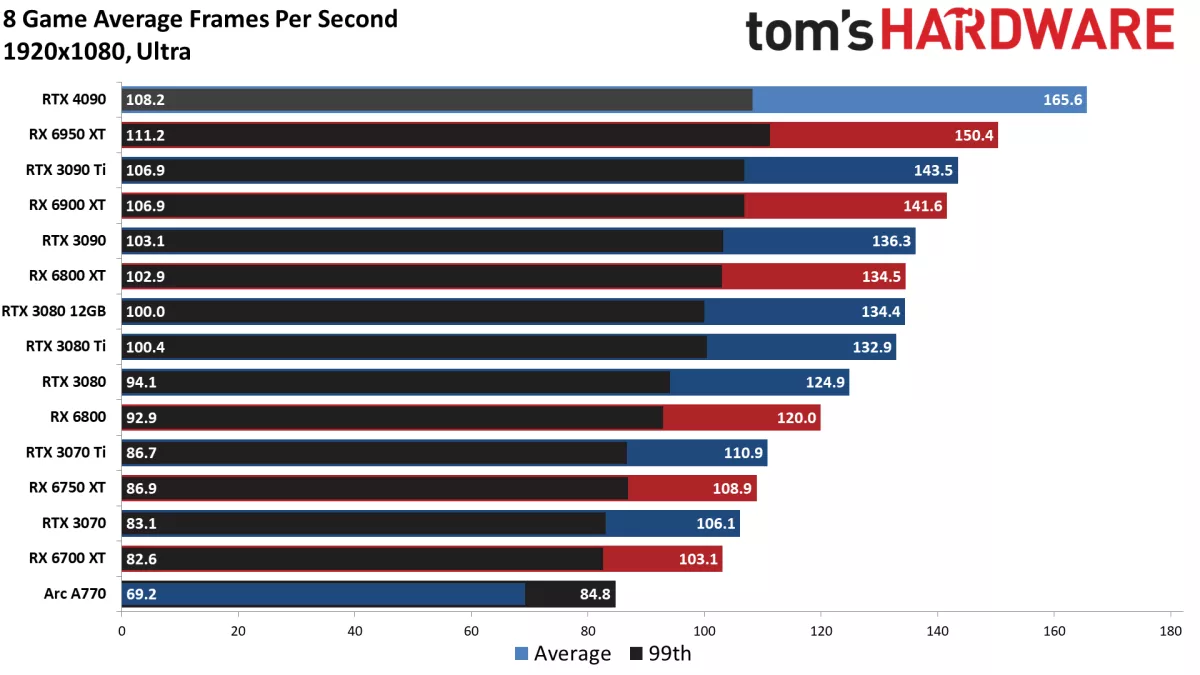

The standard rasterization results at 1080p ultra mainly show that, while some games still gain performance, others are clearly CPU-bound. Here the RTX 4090’s lead over the RTX 3090 Ti shrinks to 16% on average, and two games (Far Cry 6 and Flight Simulator) actually run faster on the previous-generation card.

Even AMD’s RX 6950 XT closes the gap, trailing the 4090 by just 9%. Both the 6950 XT and the 4090 have large caches, which have historically helped AMD more at 1080p than at 4K. Nvidia’s cache design takes a different approach, but both GPUs run into CPU bottlenecks. If we dropped further to 1080p medium settings (not shown here), the 6950 XT would actually take a 1% lead in overall performance.

The message is clear: do not buy an RTX 4090 solely for gaming at 1080p. Even with a 360 Hz gaming monitor, you would probably need to lower quality settings to raise frame rates, and at that point the 4090 would hold little advantage over a previous-generation card.

Ray tracing again shows smaller gains at 1080p, though the RTX 4090 still leads the 3090 Ti by 59% overall. These are the most demanding games currently available, though some are clearly less taxing than others (Metro Exodus Enhanced Edition, we’re looking at you).

The RTX 4090 still more than doubles the performance of the RX 6950 XT, which underscores how much AMD needs to move on from the RDNA 2 architecture. AMD’s upcoming RDNA 3 architecture bears watching, as it could close the performance gap. Notably, Intel’s Arc A770, with only 32 RTUs versus 60 Ray Accelerators on AMD’s RX 6800 (40 on the 6750/6700), nearly matches the DXR performance of the RX 6800 and beats the RX 6750 XT.

DLSS provides an average 21% performance increase at 1080p. Keep in mind that with DLSS in Performance mode (4x upscaling) at a 4K output, Nvidia GPUs render at 1080p and then upscale the result to 4K. DLSS itself carries a small performance cost (smaller on Ada Lovelace than on Ampere), but the native 1080p results give a good preview of what to expect from DLSS Performance mode at 4K.
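The relationship between output resolution and internal render resolution can be sketched in a few lines of Python. Performance mode’s 4x pixel upscale (half the resolution on each axis) comes from the text above. The 2/3 per-axis scale used here for Quality mode is an assumption based on Nvidia’s commonly cited figures, and the names `AXIS_SCALE`, `render_resolution`, and `pixel_upscale_factor` are ours.

```python
from fractions import Fraction

# Per-axis render scale for each mode. Performance (1/2 per axis, 4x pixels)
# is stated in the text; the Quality value of 2/3 is an assumption.
AXIS_SCALE = {
    "Quality": Fraction(2, 3),
    "Performance": Fraction(1, 2),
}


def render_resolution(out_width, out_height, mode):
    # Internal resolution the GPU renders before upscaling to the output.
    scale = AXIS_SCALE[mode]
    return int(out_width * scale), int(out_height * scale)


def pixel_upscale_factor(mode):
    # Ratio of output pixels to rendered pixels.
    return float(1 / AXIS_SCALE[mode] ** 2)


for mode in AXIS_SCALE:
    width, height = render_resolution(3840, 2160, mode)
    factor = pixel_upscale_factor(mode)
    print(f"{mode:12s} renders {width}x{height} ({factor:.2f}x upscale to 4K)")
```

Under these assumptions, 4K Performance mode renders 1920x1080, which is why native 1080p results are a reasonable proxy for it, minus the cost of the upscaling pass itself.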

GeForce RTX 4090: DLSS 3, Latency, and ‘Pure’ Ray Tracing Performance

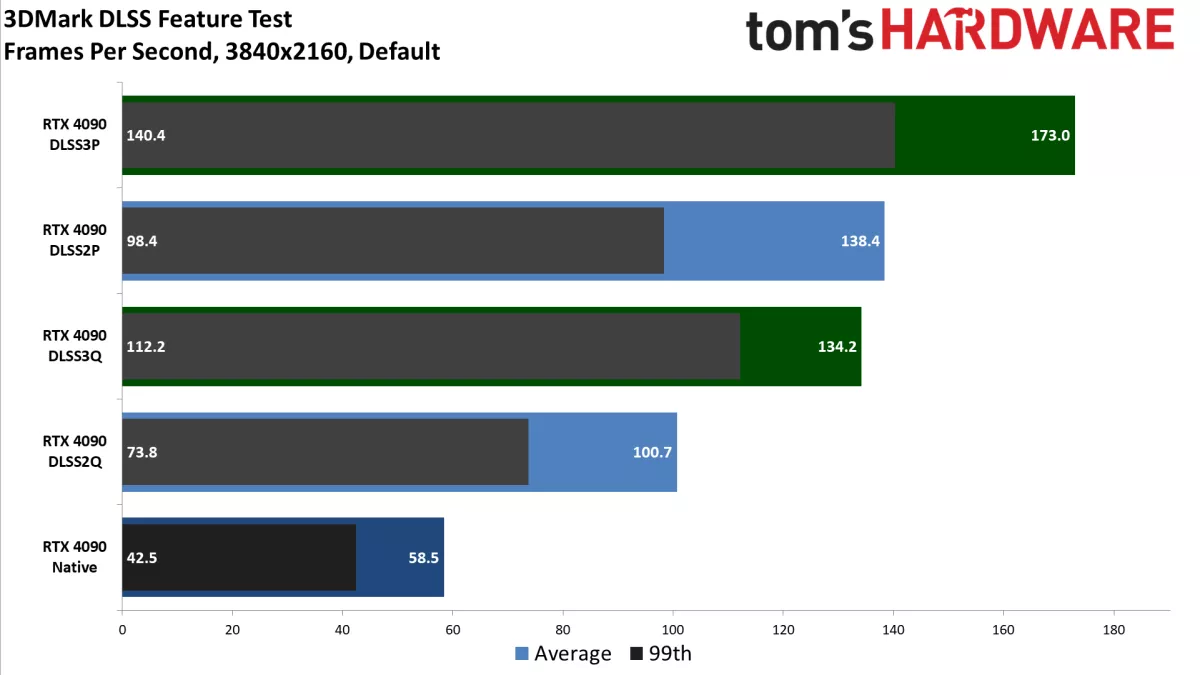

Nvidia’s Ada Lovelace architecture and RTX 40-series GPUs introduce a number of new technologies, some of which cannot be compared directly against previous-generation cards. DLSS 3 is a prime example. In short, DLSS 3 adds a major new feature called Frame Generation. It relies on an enhanced Optical Flow Accelerator (OFA) fixed-function unit found only in Ada GPUs. Ampere and even Turing GPUs also have OFAs, but Nvidia says they are not fast enough to meet Frame Generation’s computational demands. We have no way to confirm or refute that claim.

DLSS 3 remains backward compatible with DLSS 2. In other words, if you have an RTX 20- or 30-series graphics card, a DLSS 3 game falls back to conventional DLSS 2 upscaling. The preview games Nvidia supplied offer a toggle for Frame Generation, and in some cases it can be used without enabling DLSS upscaling. That could be very useful in games that are inherently CPU-bound, such as Flight Simulator. As an added benefit for Nvidia GPU owners, games that implement DLSS 3 must also support Nvidia Reflex, which can significantly reduce total system latency.

Nvidia says more than 35 games and applications are already adding the technology. Given the benefits we have seen, we expect many more to join the list in the coming years.

Frame Generation takes the previous frame and the current frame and generates an intermediate frame between them. Every other displayed frame is therefore a generated one, with the GPU drivers pacing frame output to keep delivery even. This approach adds roughly one frame of latency plus some additional overhead. However, Reflex offsets that added latency, at least when compared with DLSS 2 running without Reflex. In effect, Nvidia spends the latency savings from Reflex on Frame Generation.

In the best case, Frame Generation can theoretically double a game’s frame rate. It does not increase the number of frames the CPU generates, though, nor does it add input sampling. The result is that games look smoother and feel more fluid, but for competitive esports, Reflex without Frame Generation may still be the better choice thanks to its lower latency.
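As a rough illustration of the interleaving described above, the Python sketch below builds an idealized timeline of displayed frames. It is not Nvidia’s algorithm: the function names and the assumption that each generated frame lands exactly halfway between two rendered frames are ours, and the sketch ignores the overhead and pacing work done by the driver.

```python
def displayed_timeline(rendered_fps, n_rendered):
    """Return (timestamp_ms, kind) pairs for an idealized frame sequence."""
    frame_ms = 1000 / rendered_fps
    timeline = []
    for i in range(n_rendered):
        t = i * frame_ms
        if i > 0:
            # The in-between frame is synthesized from frames i-1 and i,
            # so it cannot exist until frame i has been rendered. That
            # dependency is the source of the extra frame of latency.
            timeline.append((t - frame_ms / 2, "generated"))
        timeline.append((t, "rendered"))
    return timeline


def effective_fps(timeline):
    # Displayed frame intervals divided by the time they span.
    span_ms = timeline[-1][0] - timeline[0][0]
    return (len(timeline) - 1) / span_ms * 1000


timeline = displayed_timeline(rendered_fps=60, n_rendered=5)
for timestamp, kind in timeline:
    print(f"{timestamp:6.1f} ms  {kind}")
print(f"effective rate: {effective_fps(timeline):.0f} fps")
```

Only the "rendered" entries reflect new game state and input, which is why the displayed frame rate can double while responsiveness does not.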

With that groundwork laid, let’s preview DLSS 3 in seven different games and demos.

Nvidia claims that with DLSS 3, the RTX 4090 can be 2x to 4x faster than the RTX 3090 Ti using DLSS 2. Most of the preview games and applications would only run on the RTX 4090, and time constraints limited how much we could test, but a general pattern emerged. Broadly speaking, DLSS 2 Performance upscaling (4x upscale, rendering at 1080p and upscaling to 4K) roughly doubles the frame rate when the CPU is not the limiting factor.

With DLSS 3, the RTX 4090 reaches up to 5x its native performance in the Chinese MMO Justice. Results vary across the other previews. The DLSS Feature Test in 3DMark shows a 3x increase. A Plague Tale: Requiem and F1 2022 improve by up to 2.4x. Cyberpunk 2077 improves by up to 3.6x over native, and the Unreal Engine 5 demo Lyra gains up to 2.2x. Finally, Flight Simulator shows a 2x improvement despite being almost entirely CPU-limited on the RTX 4090, and in that situation a doubling of frame rates is a significant gain.

We are still evaluating the image quality of DLSS 3 with Frame Generation against conventional rendering. Doing so requires changes to our normal test procedures, as we need to capture gameplay at more than 60 fps to get meaningful results. Subjectively, the experience is noticeably smoother, and it is difficult to tell rendered frames from generated frames during gameplay.

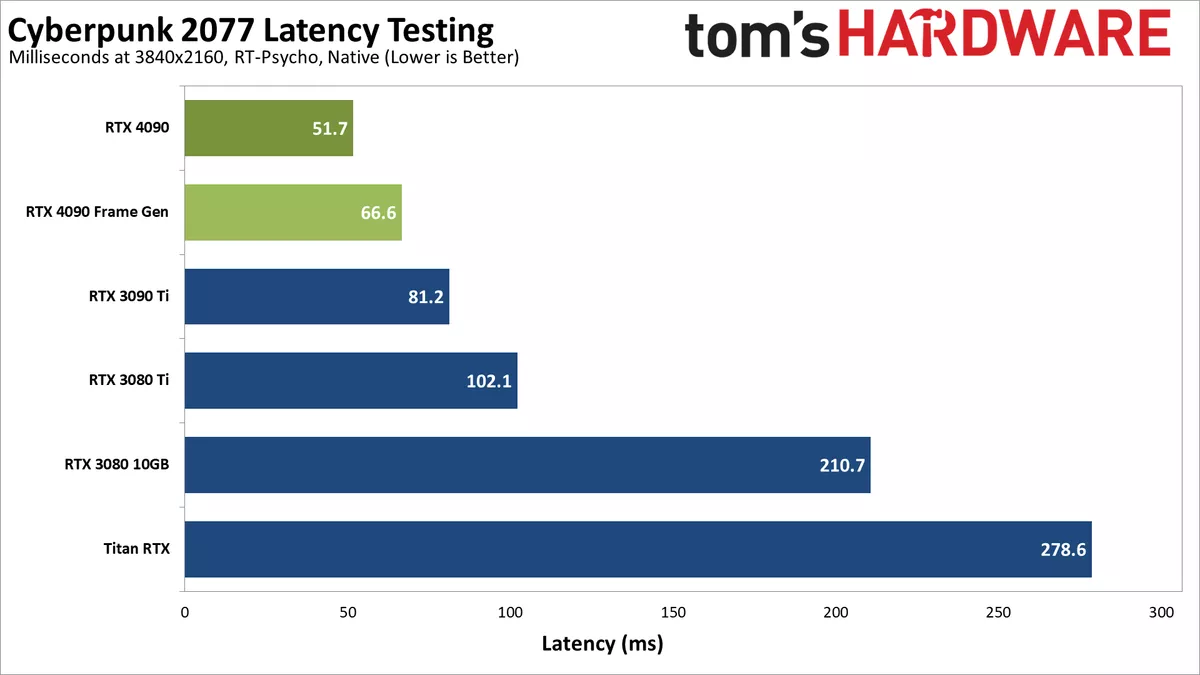

For our Frame Generation latency testing, we used the preview build of Cyberpunk 2077. Because the game supports Reflex, we could capture data on both RTX 30-series GPUs and the RTX 4090 using Nvidia’s FrameView utility. We tested at 4K with the RT-Ultra preset and Ray Traced Lighting set to Psycho. Reflex was enabled on all GPUs, matching what most gamers will use.

Latency is closely tied to frame rate: faster GPUs deliver higher frame rates and therefore lower latency. The native results show this, with the RTX 4090 at 52ms versus 81ms on the 3090 Ti. The Titan RTX, chosen because it is the fastest 20-series GPU and has 24GB of VRAM, comes in at 279ms. With Frame Generation enabled, the RTX 4090 reports 67ms, an increase of 15ms that is about the same as one frame of delay at 60 fps.
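The “single frame at 60 fps” comparison is simple arithmetic on the latency figures above. A minimal Python check (variable and function names are ours):

```python
def frame_time_ms(fps):
    # Time between consecutive frames at a given frame rate.
    return 1000 / fps


native_latency_ms = 52     # RTX 4090, native 4K
frame_gen_latency_ms = 67  # RTX 4090, native 4K with Frame Generation

added_ms = frame_gen_latency_ms - native_latency_ms
print(f"added latency: {added_ms} ms")
print(f"one frame at 60 fps: {frame_time_ms(60):.1f} ms")
```

The 15ms penalty is slightly less than the 16.7ms frame time at 60 fps, which fits the description of Frame Generation holding back roughly one frame.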

Enabling DLSS Quality upscaling nearly doubles the performance of the RTX cards and substantially reduces latency. The RTX 4090’s latency drops to 34ms, or 46ms with Frame Generation, a 12ms difference, with DLSS 3 running at 106 fps. Importantly, the RTX 4090 with DLSS 3 has about the same latency as the 3090 Ti with DLSS 2 while delivering more than twice the performance.

Finally, with Performance mode upscaling, the RTX 4090 with Frame Generation has slightly higher latency than the 3090 Ti, but its frame rate is still more than double. On a 144 Hz 4K display like the one we used for testing, DLSS 3 looks better and feels slightly smoother. The difference is not huge, but triple-digit frame rates at 4K in such a demanding game are very welcome.

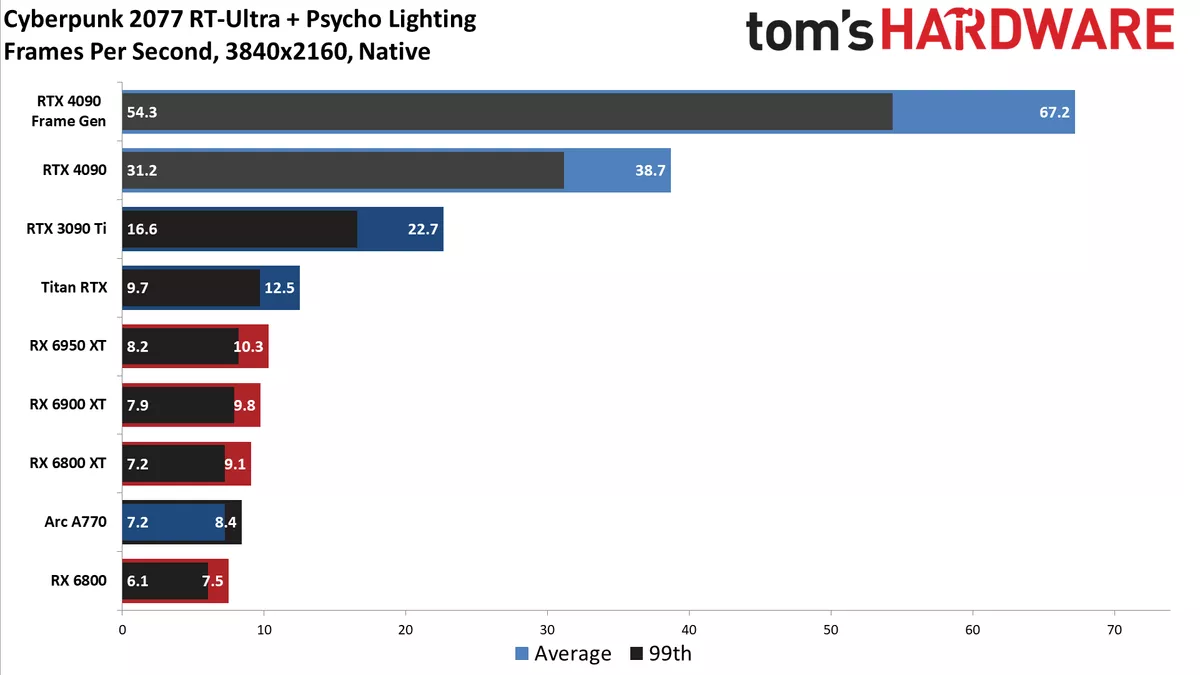

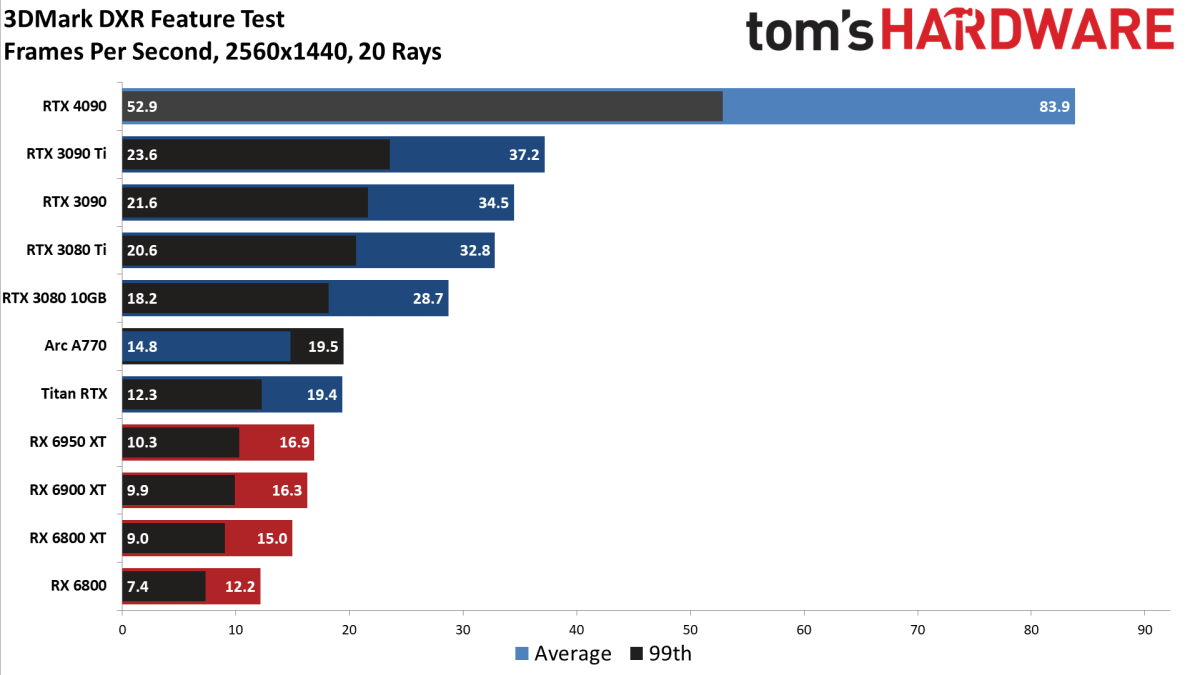

The culmination of our exploration involves showcasing the relative performance of the fastest GPUs offered by Nvidia, AMD, and even Intel, as they engage in the realm of pure ray tracing performance — or as close an approximation to “pure” RT as can be achieved. Our assessment was executed through the utilization of the same settings as our latency testing: Cyberpunk 2077’s RT-Ultra settings coupled with Psycho Lighting. The array featured several AMD GPUs, along with the inaugural appearance of Intel’s Arc A770. Additionally, we employed the 3DMark DXR Feature Test, set to 20 rays, which notably embraces full ray tracing as opposed to hybrid rendering.

In the context of the 3DMark DXR Feature Test, the conspicuous ascendancy of the RTX 4090 comes as no surprise. Evidently, the RTX 4090 outperforms all other GPUs by a substantial margin. It’s crucial to underscore that this evaluation is undertaken devoid of Ada’s SER, OMM, and DMM features being integrated into the test, thus attributing the differentiation between the 4090 and the 3090 Ti to the utilization of more proficient RT cores in greater abundance.

Further down the chart, the position of Intel’s Arc A770 may raise some eyebrows; it certainly raised ours. With only 32 RTUs running at around 2.35 GHz, Intel’s first ray tracing hardware manages to rival 84 of Nvidia’s first-generation RT cores. That parity may not hold in every game that supports ray tracing, but all of AMD’s top RX 6000-series GPUs fall behind both the Arc A770 and the Titan RTX. This is why AMD’s first-generation RT hardware is often described as relatively underpowered.

Cyberpunk 2077 paints a somewhat different picture, though it doesn’t change the fact that nothing below the RTX 3090 Ti reaches remotely playable frame rates at maxed-out settings and 4K. The RTX 4090 manages 39 fps at native resolution, which rises to 67 fps, an increase of roughly 72%, with Frame Generation.

Overall, ray tracing performance varies widely across vendors and hardware generations.

We also compared DLSS modes against FSR modes, while acknowledging the inherent differences between the two technologies. For these tests we treated DLSS Quality as roughly equivalent to FSR Ultra Quality, and DLSS Performance as roughly equivalent to FSR Quality. The pairings aren’t exact; our goal was to see whether upscaling could bring non-RTX cards up to playable performance. It could not.

The RX 6950 XT peaks at just 23 fps with FSR Quality mode. Going meaningfully higher would mean dropping to Balanced or Performance mode at the cost of image quality. Note that the Arc A770, while it can’t keep pace with AMD’s faster GPUs, outperforms the RX 6800 in all three tests.
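To see why the DLSS and FSR pairings discussed above are only approximate, it helps to compare the internal render resolution each mode would use at 4K. The sketch below is illustrative only. The per-axis scale factors are the commonly published vendor values, not figures from our testing, and we assume FSR 1.0 factors because that is the version with an Ultra Quality mode. The function name `render_resolution` is ours.

```python
# Per-axis scale factors (ASSUMED from vendor documentation, not measured here):
# render size = output size / factor.
DLSS_SCALE = {"Quality": 1.5, "Performance": 2.0}
FSR1_SCALE = {"Ultra Quality": 1.3, "Quality": 1.5, "Balanced": 1.7, "Performance": 2.0}


def render_resolution(width: int, height: int, factor: float) -> tuple[int, int]:
    """Internal render resolution for a given output size and per-axis scale factor."""
    return round(width / factor), round(height / factor)


OUTPUT = (3840, 2160)  # 4K output, as used in the tests

# The two pairings described in the text.
pairings = [("Quality", "Ultra Quality"), ("Performance", "Quality")]

for dlss_mode, fsr_mode in pairings:
    dlss_res = render_resolution(*OUTPUT, DLSS_SCALE[dlss_mode])
    fsr_res = render_resolution(*OUTPUT, FSR1_SCALE[fsr_mode])
    print(f"DLSS {dlss_mode}: {dlss_res}  vs  FSR {fsr_mode}: {fsr_res}")
```

Under these assumed factors, the FSR side of each pairing renders more pixels than the DLSS side, so these are not equal-resolution comparisons.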

In summary, Nvidia has clearly improved ray tracing hardware performance with Ada Lovelace. The evidence comes not only from our architectural analysis but also from the measured results above.

GeForce RTX 4090: Professional and Content Creation Performance

GPUs are used for far more than gaming, including professional applications, AI training, inferencing, and more. We want to broaden our GPU testing, particularly for extreme GPUs like the RTX 4090, and we’re looking for your suggestions for a machine learning benchmark that is both robust and quick to run.

For now, we have a selection of 3D rendering applications that make use of ray tracing hardware, along with the SPECviewperf 2020 v3 test suite.

Your input would help us make our GPU testing more comprehensive and meaningful.

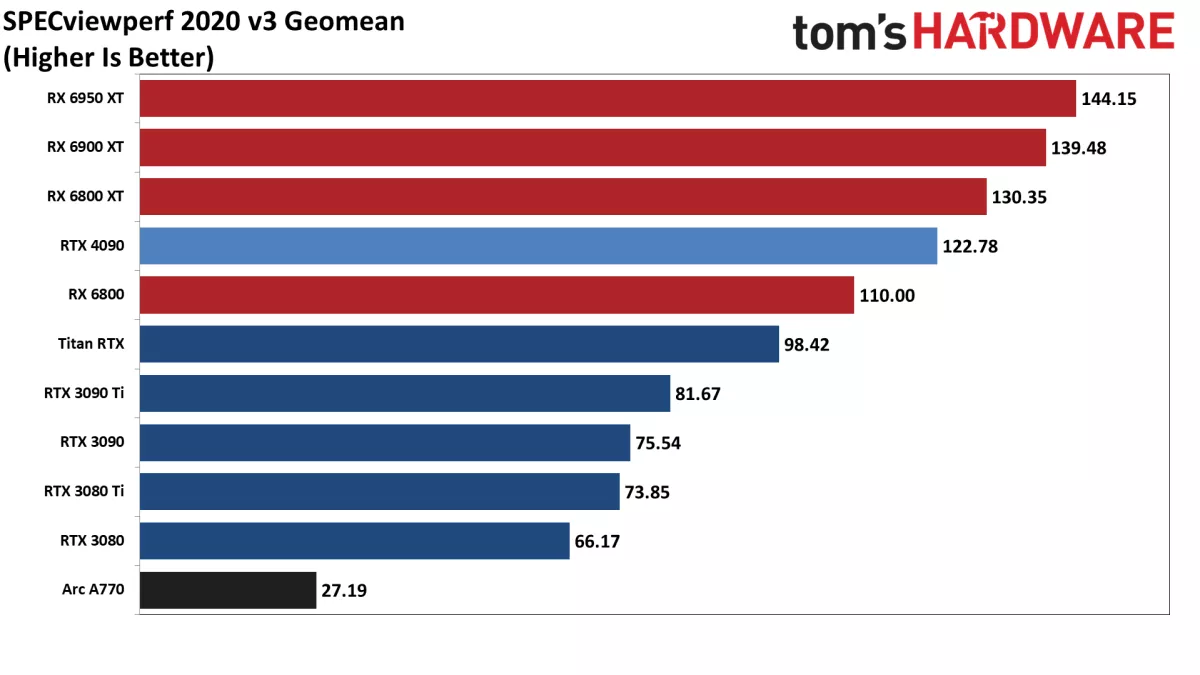

SPECviewperf 2020 consists of eight benchmarks. We also include an “overall” chart that uses the geometric mean of the eight results to produce an aggregate score. This aggregate isn’t an official metric; it simply weights each test equally to give a high-level view of performance. How useful the results are depends on the applications you actually use, since professionals tend to rely on specific programs.
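As a minimal sketch of why a geometric mean gives every test equal weight, consider the example below. The eight scores are hypothetical placeholders, not SPECviewperf results, and `geometric_mean` is our own helper. Doubling any single test raises the aggregate by the same factor, no matter how large or small that test’s raw score is.

```python
import math


def geometric_mean(scores):
    """Geometric mean computed in log space; all scores must be positive."""
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# HYPOTHETICAL scores for eight tests (placeholders, not measured data).
base = [50.0, 400.0, 120.0, 80.0, 300.0, 60.0, 150.0, 90.0]

# Double the smallest-scale test, then separately the largest-scale test.
double_small = [base[0] * 2] + base[1:]
double_large = [base[0], base[1] * 2] + base[2:]

gain_small = geometric_mean(double_small) / geometric_mean(base)
gain_large = geometric_mean(double_large) / geometric_mean(base)

print(f"Gain from doubling the 50-point test:  {gain_small:.4f}x")
print(f"Gain from doubling the 400-point test: {gain_large:.4f}x")
```

Both gains equal 2^(1/8), roughly 1.09x. An arithmetic mean would instead be dominated by whichever test reports the largest raw numbers.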

AMD has recently talked about improvements to its OpenGL and professional drivers. Some of those improvements have made their way into the consumer Radeon Adrenalin 22.7.1 and later drivers, so we retested every GPU in these charts with the latest available drivers. AMD’s RX 6000-series GPUs improved overall by 65 to 75 percent compared with our earlier results from the RX 6950 XT review, led by a doubling of catia-06 performance and a quadrupling of snx-04 performance.

With the new drivers, most of AMD’s GPUs now surpass even the RTX 4090 in our overall SPECviewperf 2020 v3 geomean chart. Looking at the individual charts, the RTX 4090 still leads in 3dsmax-07, energy-03, maya-06, and solidworks-07. If you rely on those applications, weigh these results accordingly. Note also that Nvidia’s professional RTX cards, such as the RTX 6000 48GB, can deliver substantially higher performance thanks to their fully optimized professional drivers. (The performance of the Titan RTX in snx-04 provides a glimpse of the potential of Nvidia’s professional drivers.)

We present these results to help you make an informed decision about which GPU suits your professional workloads.

The SPECviewperf results for Intel’s Arc A770 are mediocre. It sits at the bottom of most charts, sometimes by a wide margin (as in snx-04 and solidworks-07). Future driver updates may improve things, though some of the gaps may be architectural. Keep in mind that the Arc A770 costs roughly half as much as cards like the RX 6900 XT and only about a fifth as much as the RTX 4090.

These results show how hardware, drivers, and price together determine where the Arc A770 stands among current GPUs.

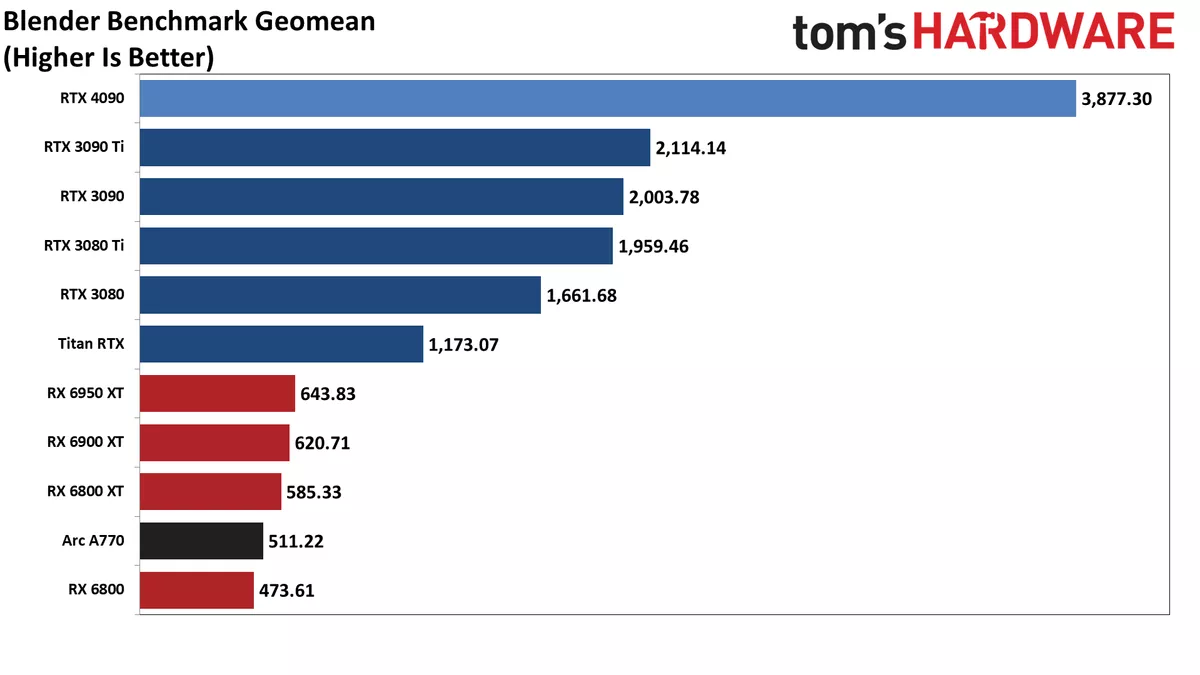

Next up is Blender, a popular open-source rendering application that has been used to make feature-length films. We use the latest version, Blender 3.30, and its three tests. This version includes the Cycles X engine, which can use ray tracing hardware on AMD, Nvidia, and Intel Arc GPUs through different interfaces: AMD’s HIP (Heterogeneous-computing Interface for Portability), Nvidia’s CUDA or OptiX APIs, and Intel’s oneAPI.

Because Blender is open source, each company can contribute its own rendering enhancements, which makes for a more level performance comparison across professional GPUs.

Rendering applications like Blender highlight raw theoretical ray tracing hardware performance. Here the RTX 4090 delivers a substantial generational leap over the RTX 3090 Ti, with an 83% improvement in the overall score. Across the three test scenes, it is 101% faster in Monster, 61% faster in Junkshop, and 91% faster in Classroom.
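As a quick sanity check, the three per-scene gains can be combined into a single figure and compared with the quoted 83% overall improvement. This is a sketch under an assumption: we don’t state here exactly how the overall score is aggregated, so the code tries both an equal-weight geometric mean and an arithmetic mean of the speedup ratios.

```python
from statistics import fmean, geometric_mean

# Per-scene speedups of the RTX 4090 over the RTX 3090 Ti, from the text
# (a gain of 101% corresponds to a ratio of 2.01, and so on).
speedups = {"Monster": 2.01, "Junkshop": 1.61, "Classroom": 1.91}

geo = geometric_mean(speedups.values())   # equal-weight geometric mean (assumed method)
arith = fmean(speedups.values())          # simple arithmetic mean, for comparison

print(f"Geometric mean speedup:  {geo:.3f}x ({(geo - 1) * 100:.1f}% faster)")
print(f"Arithmetic mean speedup: {arith:.3f}x ({(arith - 1) * 100:.1f}% faster)")
```

Both aggregates land within a point or two of 83%, so the overall figure is consistent with the per-scene results under either assumption.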

AMD and Intel GPUs, however, trail far behind in Blender 3.30. Even the fastest AMD card is less than half as fast as the RTX 3080 10GB and 45% slower than the Titan RTX. The Intel Arc A770 lands just above the RX 6800. To put it starkly, the combined performance of the five non-Nvidia GPUs amounts to only about 73% of what a single RTX 4090 delivers.

These results show how widely rendering performance varies between GPUs in Blender, and how dominant the RTX 4090 is.

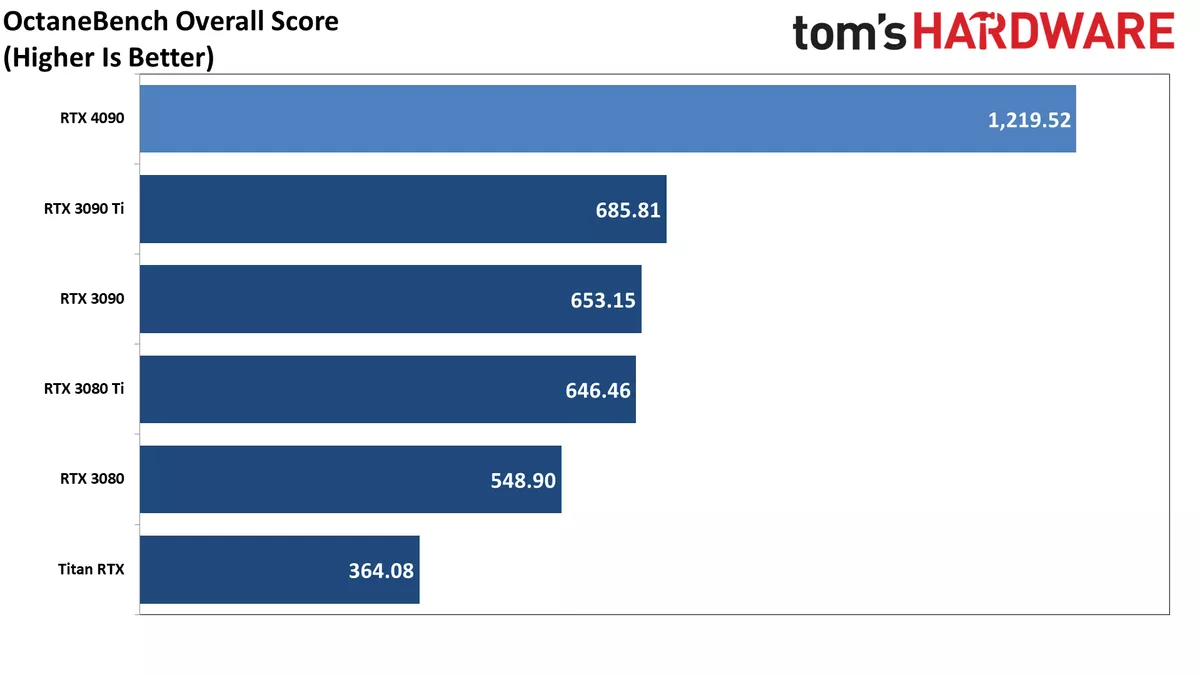

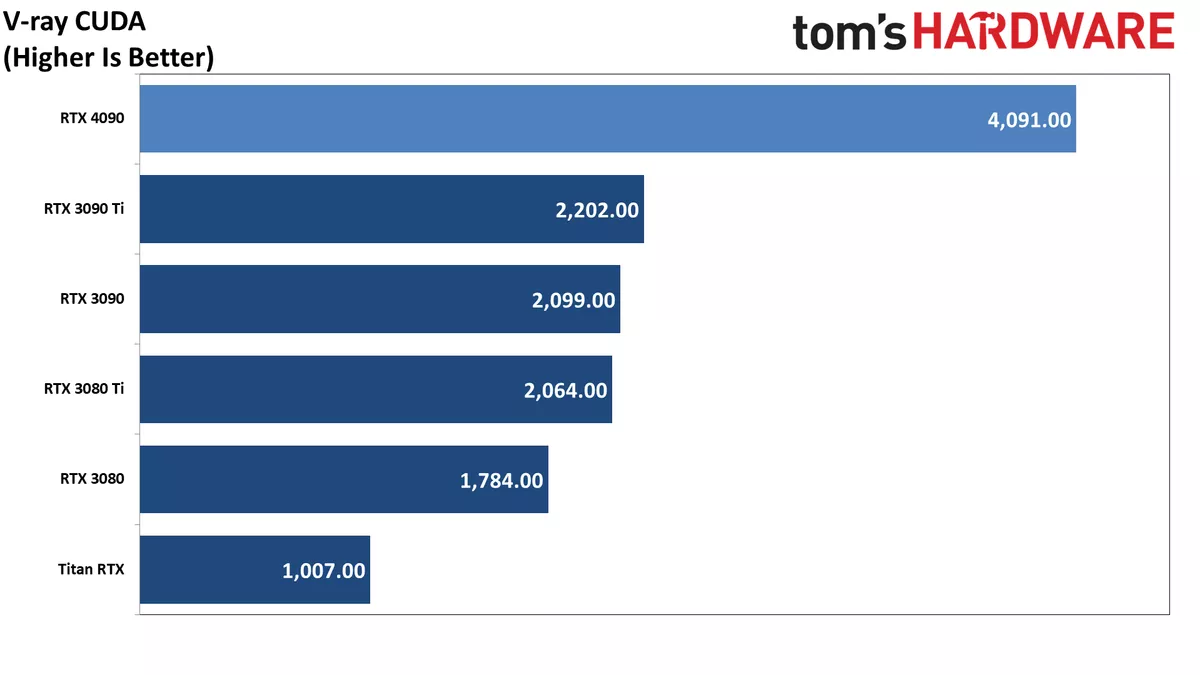

Our final two professional tests only support hardware ray tracing on Nvidia’s RTX cards. That’s because Nvidia’s CUDA and OptiX APIs have been around, and supported, for far longer than any alternatives. Octane once supported OpenCL, but dwindling development effort and stability concerns led the developers to drop OpenCL support in later versions. We’ve asked OTOY and Chaos Group whether they plan to add AMD and Intel GPU support; for now, these charts include only Nvidia GPUs. Note that OctaneBench was last updated in 2020, while the most recent V-Ray Benchmark was released on June 13, 2022.

As with Blender, the RTX 4090’s gains in Octane and V-Ray depend on the scene being rendered. The overall OctaneBench result is 78% higher than the RTX 3090 Ti’s, while the two V-Ray renders are 83% and 86% faster. For professionals who do this kind of work, the RTX 4090 could be a compelling upgrade.

These results show how architectural improvements translate into higher performance in specialized professional workloads.

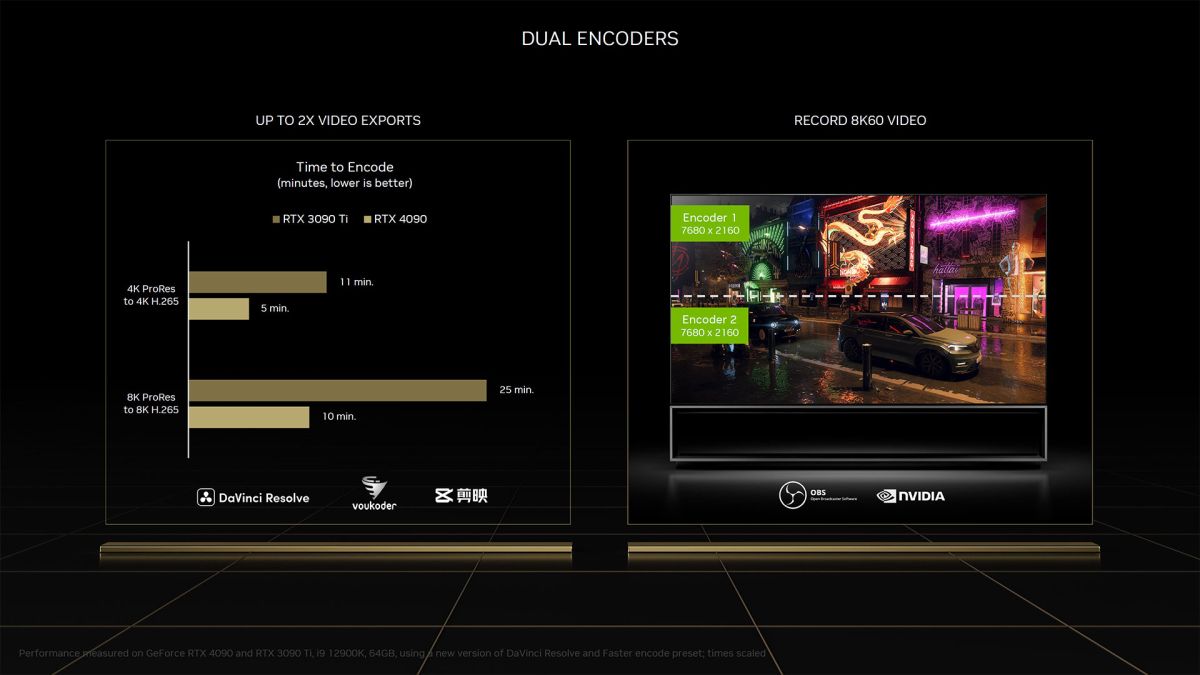

GeForce RTX 4090 Video Encoding Performance and Quality

Nvidia’s Ada Lovelace architecture introduces the 8th-generation NVENC block, which adds support for AV1 encoding. In addition, RTX 40-series GPUs with 12GB or more of VRAM have dual NVENC blocks, which can either process separate streams or double the encoding performance for a single stream.

The new NVENC can encode at up to 8K at 60 Hz. That resolution serves only a small niche of users today, but it shows Nvidia’s intent to stay ahead in encoding technology. It’s not unreasonable to speculate that 8K at 120 Hz could eventually be supported by using both encoders together. An 8K display for testing gaming performance would also be welcome for more comprehensive evaluations.

GeForce RTX 4090: Power, Temps, Noise, Etc.

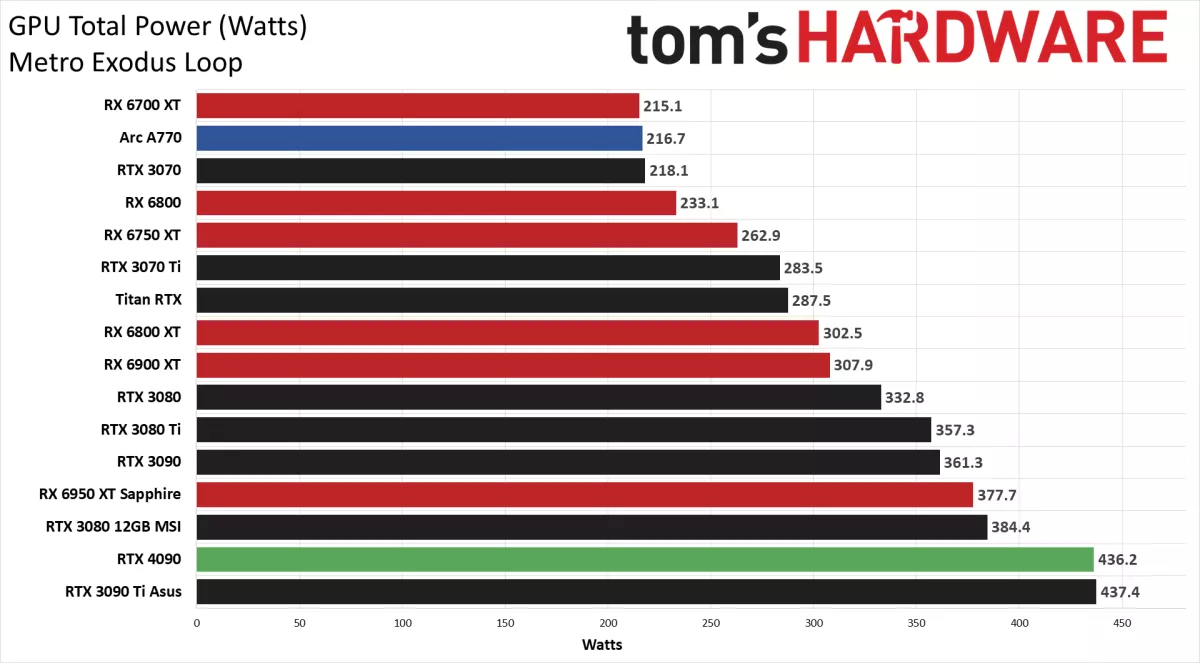

Accurate power measurements are essential for understanding a GPU’s performance and efficiency, so we use Powenetics testing hardware and software. We increased the resolution and settings to more demanding levels, 4K Extreme for Metro Exodus and 1440p for FurMark, to stress the GPU and capture its worst-case power consumption. This data helps you gauge a GPU’s power requirements and choose an appropriate power supply and cooling solution.

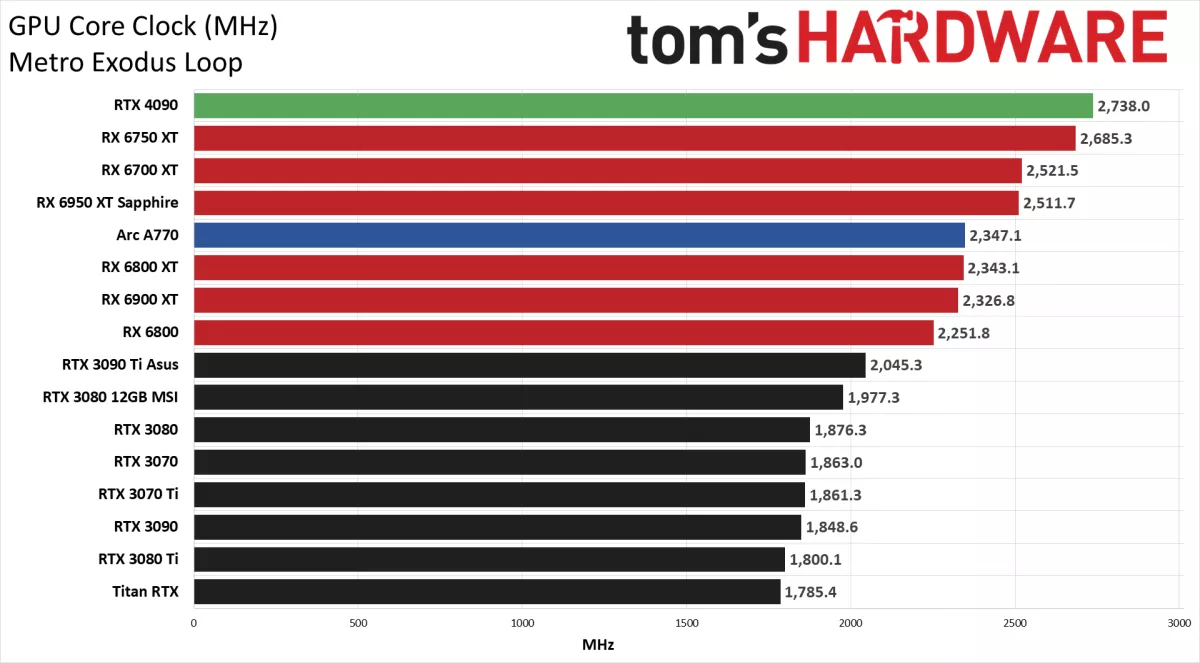

Comparing power consumption and clock speeds on the Asus RTX 3090 Ti and the RTX 4090 highlights the efficiency gains of the Ada Lovelace architecture. Both cards have a 450W TBP (Total Board Power), yet the RTX 4090 delivers significantly higher performance at a similar power draw, which means substantially better performance per watt.

Both cards draw around 440W in Metro Exodus and 460W–470W in FurMark, which suggests they are operating within their designed power envelopes. The RTX 4090 also holds stable clock speeds of around 2750 MHz during gaming workloads, a substantial increase over prior Nvidia GPUs that contributes to its performance gains.

The fact that even FurMark, a notoriously power-hungry stress test, doesn’t lead to a significant drop in clock speeds or fan speeds for the RTX 4090 indicates robust thermal management and power delivery systems.

Overall, these results indicate that Ada Lovelace pushes performance higher while remaining power efficient, making the RTX 4090 a compelling choice for enthusiasts who want much higher performance without a matching increase in power consumption.

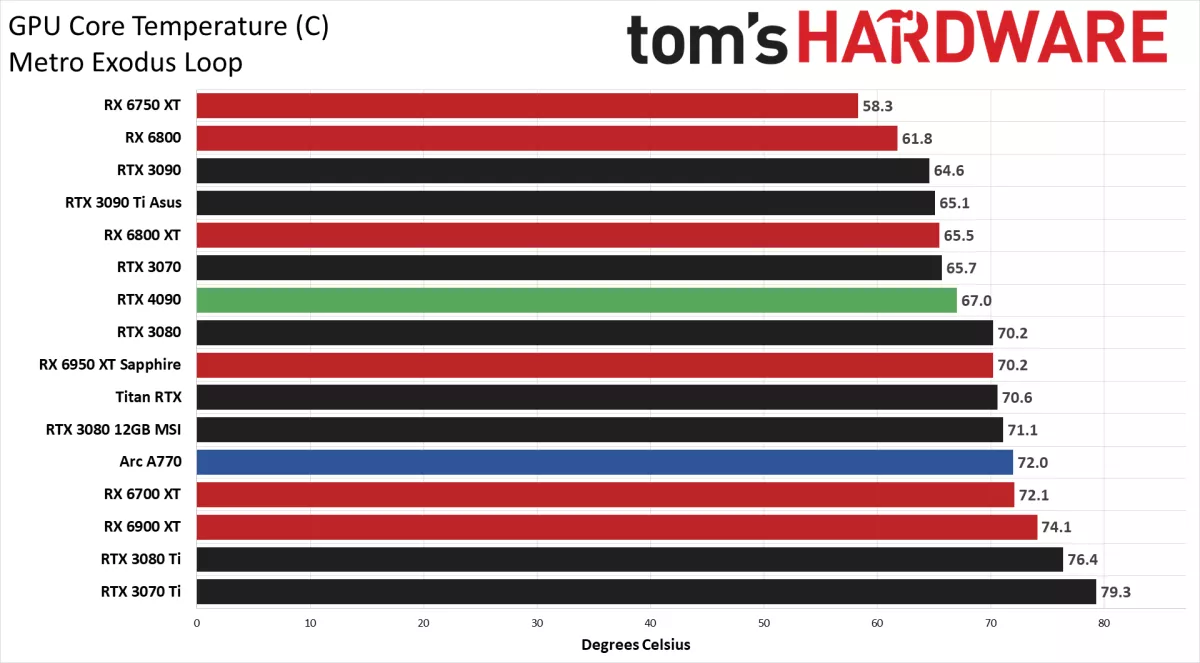

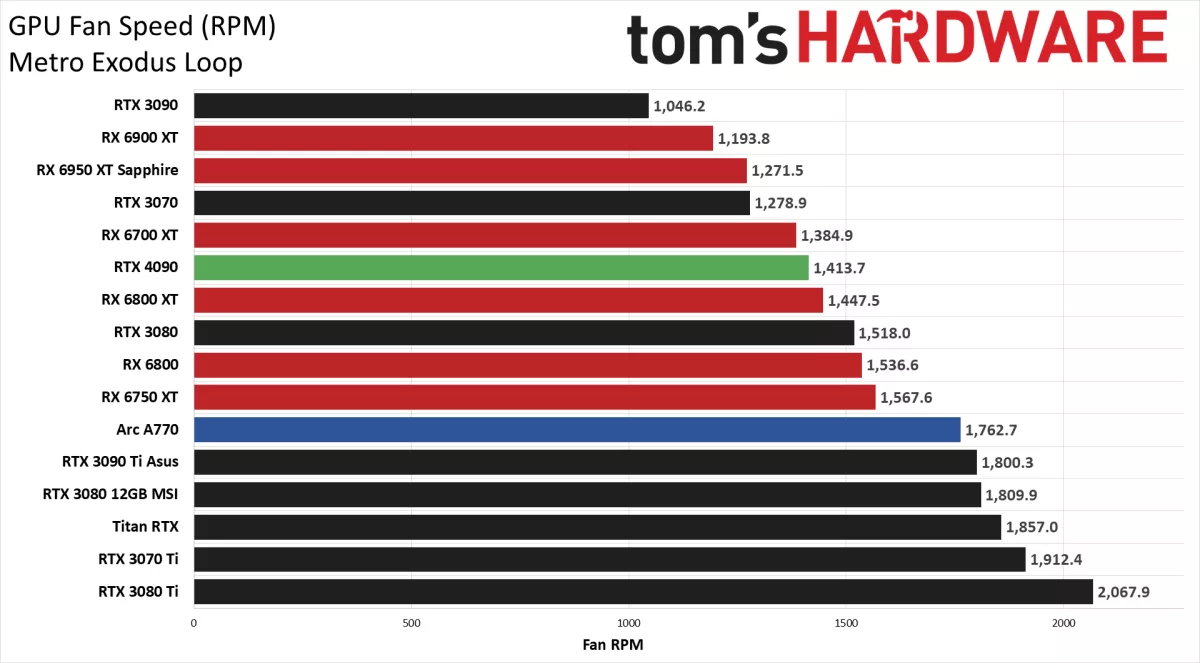

The RTX 4090 Founders Edition balances temperatures and noise levels well, which makes for a relatively good experience for gamers and enthusiasts.

Compared with its predecessors, the RTX 4090’s temperatures are reasonable. It averaged 67°C, slightly warmer than the RTX 3090 Founders Edition and about 2°C above the roughly 65°C that the Asus RTX 3090 Ti targeted. The cooler keeps operating temperatures well within a reasonable range.

While gaming, the RTX 4090 measured 45.0 dB(A) at around 40% fan speed. That is a notable improvement over the Asus RTX 3090 Ti, which registered 49.1 dB(A) at a fan speed of 74%. The RTX 4090’s fans can get louder if needed, reaching 57.2 dB(A) at 75% fan speed, which leaves headroom for more aggressive cooling.
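Because decibels are logarithmic, the 4.1 dB gap between the two cards is larger than it may look. The sketch below converts a decibel difference into sound pressure and sound power ratios using the standard definitions. The function name is ours, and perceived loudness doesn’t track either ratio directly, so treat the output as a rough guide only.

```python
def db_difference_to_ratios(delta_db: float) -> tuple[float, float]:
    """Convert a level difference in dB to (sound pressure ratio, sound power ratio)."""
    pressure_ratio = 10 ** (delta_db / 20)
    power_ratio = 10 ** (delta_db / 10)
    return pressure_ratio, power_ratio


# Gaming noise levels from the text, in dB(A).
rtx_4090_dba = 45.0
asus_3090_ti_dba = 49.1

delta_db = asus_3090_ti_dba - rtx_4090_dba
pressure_ratio, power_ratio = db_difference_to_ratios(delta_db)

print(f"Difference: {delta_db:.1f} dB")
print(f"Sound pressure ratio: {pressure_ratio:.2f}x")
print(f"Sound power ratio:    {power_ratio:.2f}x")
```

In other words, the Asus card emits roughly 2.6 times the sound power of the RTX 4090 Founders Edition in this test.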

Taken together, the thermal and noise results show that the RTX 4090 Founders Edition cools effectively without sacrificing acoustic comfort, which is good news for anyone who wants a quieter system along with high performance.

All told, the RTX 4090 Founders Edition is no worse than the RTX 3090 Ti when looking at power consumption alone, and it is substantially faster. We’re looking forward to overclocking experiments and to testing third-party cards, both of which are coming soon.

One important point: the RTX 4090 can draw 450W, which is a lot, but for integrated circuits what really matters is thermal density. Dissipating 450W from a 608mm^2 chip is not as hard as it might seem. In fact, it’s notably easier than cooling 250W from the 215mm^2 chip in the Alder Lake i9-12900K. Things get harder still with Zen 4, where a tiny 70mm^2 core complex die (CCD) can draw more than 140W.
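The thermal density comparison above is simple arithmetic: watts divided by die area. The sketch below uses only the power and area figures quoted in the text. It treats heat as spread evenly across the die, which is a simplifying assumption since real chips have hotspots, and it uses 140W for the Zen 4 CCD even though the text gives that as a lower bound.

```python
# (power in watts, die area in mm^2), as quoted in the text.
chips = {
    "RTX 4090": (450.0, 608.0),
    "Core i9-12900K": (250.0, 215.0),
    "Zen 4 CCD": (140.0, 70.0),  # text says "surpassing 140W", so this is a floor
}


def thermal_density(power_w: float, area_mm2: float) -> float:
    """Average heat flux in W/mm^2, assuming uniform dissipation across the die."""
    return power_w / area_mm2


densities = {name: thermal_density(p, a) for name, (p, a) in chips.items()}

for name, density in densities.items():
    print(f"{name:<16} {density:.2f} W/mm^2")
```

By this measure the RTX 4090 has the lowest average thermal density of the three, at well under half that of the Zen 4 CCD.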

In conclusion, the Nvidia GeForce RTX 4090 reigns supreme as the pinnacle of graphics technology. Its innovative features, unbridled power, and transformative capabilities redefine the standards of gaming and creative experiences. As you venture into the realms of virtual worlds and artistic endeavors, the Nvidia GeForce RTX 4090 stands as your unwavering companion, propelling you toward unparalleled success.

Embrace the future. Elevate your experience. Choose the Nvidia GeForce RTX 4090.